Benchmarking a JackRabbit

By joe

This is a modified version of a previous posting. We agreed to rewrite this post, eliding mention of a report we had taken issue with, and why we had taken issue with it. We will report JackRabbit benchmark data as we have measured it on our original system. Updated benchmark data, run files, and so on will be available from our site as soon as possible.

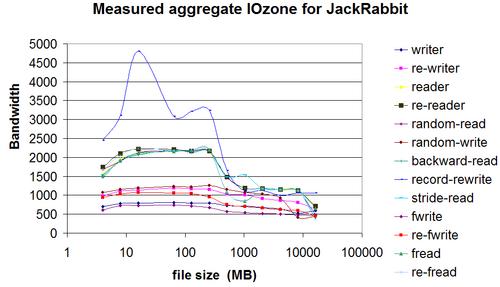

IOzone benchmarks were run and the results plotted.

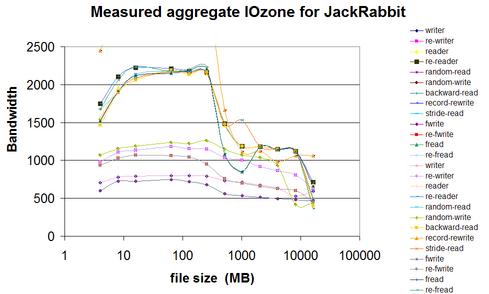

I magnified the y-axis to see more detail.

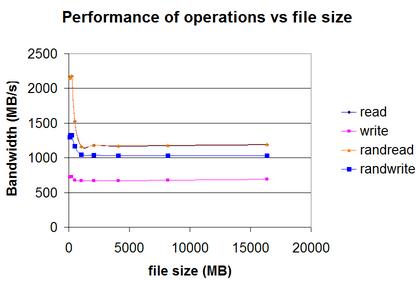

This is with an xfs file system; ext3 results are quite a bit below this. The system runs a Linux 2.6.17 kernel, with a 4MB stripe size across 2 RAID6 units of 20 disks each. Unfortunately, even with the 4MB stripe size, the RAID cards were not hitting their maximum possible performance, so observed performance is 60-75% of the maximum we expected.

Each adapter is capable of 750+ MB/s in RAID6 to the disks. If we can feed each of the adapters enough work, we can get about 1500 MB/s in the RAID60. What we see in practice is 600-680 MB/s per adapter for this example. Each adapter is on its own PCIe x8 link, so we aren’t running out of bandwidth. Our internal IOzone results suggest we are using both adapters, albeit at less than best efficiency. Later kernels (2.6.19) ought to let us better load balance between the two controllers; we will be testing this over the next few weeks.

For laughs, while we were building this unit, we also ran the IOzone tests on a single adapter. We will post those numbers later. File operational tests were also plotted.
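A rough model can make the load-balancing point concrete. The Python sketch below assumes, beyond what the post states, that the RAID60 is a plain RAID0 across the two RAID6 units with the 4MB stripe size acting as the per-unit chunk, and that each x8 slot is a first-generation PCIe link (about 250 MB/s usable per lane, per direction). The function names are hypothetical. Under those assumptions, a single request smaller than one chunk lands on only one adapter, so both cards stay busy only with large or concurrent I/O, and a 750 MB/s adapter needs well under half of its 2000 MB/s link.

```python
# Rough model of the two-adapter RAID60 described above.
# Assumptions (not stated in the post): the RAID60 is a plain RAID0 across the
# two RAID6 units with a 4 MB chunk per unit, and each x8 slot is PCIe Gen1
# (~250 MB/s usable per lane, per direction).

MB = 1024 * 1024
CHUNK_BYTES = 4 * MB            # "4MB stripe size" from the post, treated as the RAID0 chunk
N_ADAPTERS = 2                  # one 20-disk RAID6 unit behind each adapter
ADAPTER_MAX_MB_S = 750          # per-adapter RAID6 rate quoted in the post
PCIE_GEN1_MB_S_PER_LANE = 250   # assumed first-generation PCIe
LANES = 8


def adapter_for_offset(offset: int) -> int:
    """Index of the adapter (RAID6 unit) holding a given logical byte offset."""
    return (offset // CHUNK_BYTES) % N_ADAPTERS


def adapters_touched(offset: int, length: int) -> set[int]:
    """Adapters that one request of `length` bytes starting at `offset` lands on."""
    first_chunk = offset // CHUNK_BYTES
    last_chunk = (offset + length - 1) // CHUNK_BYTES
    n_chunks = last_chunk - first_chunk + 1
    # Consecutive chunks rotate through the adapters, so at most N_ADAPTERS
    # distinct adapters can be hit no matter how long the request is.
    return {(first_chunk + i) % N_ADAPTERS for i in range(min(n_chunks, N_ADAPTERS))}


link_mb_s = LANES * PCIE_GEN1_MB_S_PER_LANE             # usable MB/s per x8 link, one direction
aggregate_ceiling_mb_s = N_ADAPTERS * ADAPTER_MAX_MB_S  # best case for the whole RAID60
link_utilization = ADAPTER_MAX_MB_S / link_mb_s         # fraction of a link one adapter needs

if __name__ == "__main__":
    print("1 MB request at offset 0:   ", adapters_touched(0, 1 * MB))
    print("2 MB request crossing chunk:", adapters_touched(3 * MB, 2 * MB))
    print("64 MB sequential request:   ", adapters_touched(0, 64 * MB))
    print(f"x8 link: {link_mb_s} MB/s; adapter peak uses {link_utilization:.1%} of it")
    print(f"RAID60 ceiling: {aggregate_ceiling_mb_s} MB/s")
```

The model ignores controller caching, queue depth, and file system layout, all of which affect how evenly real workloads spread across the two cards.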

Please note that these results did not use a Red Hat derived distribution. Current supported Red Hat distributions use a 2.6.9 kernel, generally considered ancient in the Linux community, and lack support for important file systems such as xfs and jfs. The latter issues are marketing decisions, as Red Hat, for better or worse, seems to be under the impression that ext3 is “good enough”. The former issue is also problematic, in that it requires either “backporting” existing drivers and associated infrastructure, often introducing some rather interesting bugs, or doing without the feature. There are many IO fixes and tunings in later kernels. There is minimal support for newer chipsets (such as Socket 1207 and Socket 771/775), which were not available at the time of RHEL4’s launch. MSI (message-signaled interrupts) and other features don’t work well, and many kernel subsystems have been tuned, tweaked, and fixed since 2.6.9’s release. As I write this, 2.6.20 has been released.

We have measured IO performance on other, smaller versions of this machine. We loaded late model FC6, SuSE, Ubuntu, and RHEL4 onto the machine to test it, as well as OpenFiler. The performance penalty for using RHEL4-derived systems is at least 15-20% when using xfs, and more when using ext3. Note: this is with non-IOzone based workloads. We may quantify this in greater detail later if our customers request it. Real application benchmarks are really the only thing that should matter to end users.

The take-home messages are quite simple:

First: JackRabbit is a highly capable, extremely fast storage appliance in its default shipping configuration.

Second: using RAID6, JackRabbit provides better reliability than RAID5+1.

Third: while there is a performance penalty for using RAID6, it is around 10% for most of the use and test cases (based on other data we have generated).
Fourth: JackRabbit is capable of sustaining huge I/O loads, often matching or besting wider-striped systems with more controllers and lower RAID protection levels.

Fifth: as an appliance, JackRabbit can be out of the box and functional in minutes, as we have done with several customers to date.

Sixth: you can reload JackRabbit with a different OS, though some OS loads are known to provide far lower performance than the default load. It is sort of like running your car on something other than the design fuel: it might work, but it probably won’t perform as you wish. You can probably run a jet engine on something other than jet fuel, but the efficiency will be far lower. Wouldn’t it be terrible if someone loaded a jet plane with a non-design fuel for its two jet engines, and a propeller aircraft with many more engines “beat” it in a “fair” test? (Scare quotes there on purpose.)

Finally, a note on benchmarks. The only ones that matter are the real application tests. Many groups have requested that we run IOzone with file sizes of 2x and 4x RAM so they could see the raw speed of the disks. Their use cases typically involve data sizes of 1/10 to 1/4 of RAM at best, so the IOzone tests they requested are meaningless for decision making; they are far outside what those end users should expect in real use. Benchmarks can be gamed. We see it from many groups, and we were once approached and asked to do this ourselves (we refused). If a vendor tells you that you are using something in a non-optimal manner, there may be a good reason for it. It is in their interest to see you get the best performance out of your system. It is decidedly not in their interest to see a system de-tuned and compared.
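To illustrate the gap between the two regimes, here is a small Python sketch that derives IOzone file sizes from a machine’s RAM and prints matching command lines. The RAM size, record size, and test-file path are hypothetical placeholders. The IOzone options used (`-i 0` for write/rewrite, `-i 1` for read/reread, `-s` for file size, `-r` for record size, `-f` for the test file) are standard ones as we understand them, but check the documentation for your IOzone version. Files well under RAM size can be served largely from the page cache, while 2x to 4x RAM pushes the runs out to the disks.

```python
# Sketch: IOzone file sizes relative to RAM, for "typical use" vs "raw disk" runs.
# Hypothetical inputs: the RAM size, record size, and test-file path below.
import shlex

SIZE_FRACTIONS = {
    "1/10 RAM": (1, 10),   # low end of the typical use cases mentioned above
    "1/4 RAM": (1, 4),     # high end of the typical use cases
    "2x RAM": (2, 1),      # requested "raw disk speed" runs
    "4x RAM": (4, 1),
}


def file_sizes_mb(ram_mb: int) -> dict[str, int]:
    """File size in MB for each regime, as an integer fraction or multiple of RAM."""
    return {label: ram_mb * num // den for label, (num, den) in SIZE_FRACTIONS.items()}


def iozone_command(size_mb: int, record_kb: int = 1024,
                   path: str = "/data/iozone.tmp") -> list[str]:
    """Build an IOzone write/rewrite + read/reread run for one file size."""
    return ["iozone",
            "-i", "0",              # test 0: write/rewrite
            "-i", "1",              # test 1: read/reread
            "-s", f"{size_mb}m",    # file size
            "-r", f"{record_kb}k",  # record size
            "-f", path]             # test file (hypothetical path)


if __name__ == "__main__":
    ram_mb = 16 * 1024  # hypothetical 16 GB machine
    for label, size in file_sizes_mb(ram_mb).items():
        print(f"{label:>9}: {shlex.join(iozone_command(size))}")
```

For a 16 GB machine this spans roughly 1.6 GB to 64 GB, which shows how far the “raw disk” runs sit from the working sets described above.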